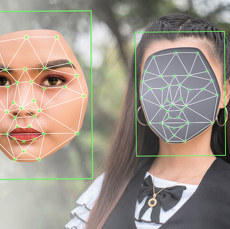

Deepfake is a term coined in 2017 for techniques, heinous or ingenious depending on whom you ask, that superimpose one person's face onto another person's body, most notoriously onto porn stars' bodies. It works by combining existing images and videos and superimposing them onto source images or videos using a machine learning technique called a generative adversarial network. The results include fake, non-consensual porn videos, fake news and other malicious hoaxes. The main societal concern is whether people can actually tell real from fake.

The history of Deepfakes

Deepfakes all started when an anonymous Redditor known as "deepfakes" began uploading videos with celebrities' faces swapped onto porn actors' bodies. The tools involved could insert a face into existing footage frame by frame, a sometimes glitchy process that has since expanded to political figures, TV personalities, and now anyone.

Unfortunately, celebrities were the easiest targets, because the technology required far more than a few images and celebrities have plenty of footage online. Today, however, the underlying deepfake technology is gaining traction and becoming more effective, especially at companies working on augmented reality such as Samsung and Google.

There has also been a reported breakthrough in controlling depth perception in video footage, which has been one of the easiest ways to distinguish real videos from fakes. The saddest part is that people have not been made aware enough, and probably 70% of the world cannot tell a deepfake from a real clip.

Samsung researchers have also recently pointed toward creating avatars for games and video conferences. This avatar technology needs only a few images, which is the most recent advance and one that could lead to the generation of full-body avatars.

Are Deepfakes obvious?

While some commentators, Hwang for instance, have cast doubt on claims that advances in deepfake technology will in time make fakes indistinguishable from real footage, the real question is how much damage the victims, who at this point could be you or me, will have suffered before a clip is actually proven to be fake.

Well, there have already been concerns about people harassing others by threatening to leak fakes of them. And judging from falling costs and ever better equipped and more skilled creators, deepfakes may affect many more people in the future.

The worrying factor is that there is no fake-detection software readily available yet, let alone the fact that most people believe what they see in the media or on social platforms; take, for example, the Belgian fake video of Trump commenting on climate policy or the doctored Pelosi video. All of which raises the question of what action should be taken afterward. As one commentator put it: "If it seems clearly intended to defraud the listener to think something pejorative, it seems obvious to take it down. But then once you go down that path, you fall into a line-drawing dilemma."

Deepfakes 2019 & public concerns

Thing is, technology doesn't just vanish into thin air because someone somewhere wishes it would. Instead, like a newborn baby, it keeps growing from one generation to the next, combining with other technological advances in software and hardware. No wonder Samsung has recently developed an easier way to fake footage that does not require much imagery, meaning your Facebook profile photos might be more than enough to get you dancing nude or having sex all over the internet in no time!

There are concerns, of course, with many sites, including Pornhub and Reddit, banning deepfakes on the grounds that they are porn whose subjects have not consented to it. The biggest fear yet is that this technology could be used to undermine democracy: for example, a fake clip of a public figure, presented as grainy cell phone video so that imperfections are overlooked and timed for the right political moment to shape public opinion. And with no solution found so far ahead of the 2020 elections, political leaders have called for legislation banning their "malicious use."

According to Robert Chesney, a professor of law at the University of Texas, however, political disruption does not even require cutting-edge technology; it can result from lower-quality material intended to sow discord rather than necessarily to fool anyone. The most practical example is the three-minute clip of House Speaker Nancy Pelosi circulating on Facebook, which appears to show her drunkenly slurring her words in public and clearly displays a lack of ethics on the part of whoever made it.

By reducing the number of photos required, Samsung's method could mean even bigger problems, because the results look more real. The cost and time involved might still make it a little complicated, though. As Chesney says, "Some people might have felt a little insulated by the anonymity of not having much video or photographic evidence online." Unfortunately for them, this technique, called "few-shot learning," can get around that, largely because it does most of the heavy computational lifting ahead of time. Rather than being trained on footage of one specific person, like the Trump-specific fakes, the system is fed a far larger amount of video featuring diverse people, the idea being that it learns the basic contours of human heads and facial expressions. From there, the neural network can apply what it knows to manipulate a given face based on only a few photos, as in the case of the Mona Lisa.

This technique is similar to other methods that have revolutionized how neural networks learn things like language, using massive data sets that teach them generalizable principles. That has given rise to models such as OpenAI's GPT-2, which writes text so fluently that its creators initially decided against releasing the full model, out of fear that it would be used to spread fake news.

At present, if it were adapted for malicious use, this particular strain of trickery would be easy to spot, according to Siwei Lyu, a professor at the State University of New York at Albany who studies deepfake forensics under a DARPA program. The demo, though impressive, misses finer details, such as Marilyn Monroe's famous mole, which vanishes as she throws her head back to laugh. The researchers also haven't addressed other hitches, including how to properly sync audio to the deepfake and how to iron out glitchy backgrounds. Lyu's team has tackled the second of these, demonstrating it on a video that fuses Obama's face onto an impersonator singing Pharrell Williams' "Happy," but the Albany researchers say they will not release the method, for everyone's safety.

If you ask me, this is proof enough that deepfakes will not stop, not today and not in the future. The reality is that deepfakes are here in 2019, getting more realistic and easier to spread thanks to advances in technology and software from companies such as Samsung.

PornDude’s final words

Deepfakes in 2019 seem to be coming at everyone pretty strong, with ever more realistic clips that can implicate, incriminate or humiliate anyone. The bitter truth is that however much we may enjoy celebrity porn fakes today, tomorrow we might lose our jobs over fake sex tapes of ourselves.
